Imagine you developed a bot detector algorithm, a little program that could be deployed on every Twitter, Facebook, or Reddit comment to flag it as either real or made by a bot. Sounds incredibly useful, right?

But before you get excited and go mass-banning all the accounts detected as bots, you might want to check whether the algorithm, you know, works.

To do that, you need to test it out (ideally on a big ol’ sample of things you know were written by bots and things you know were written by humans) and see how well it does. If it spots 90% of the bots, that sounds pretty good. Even better if it spots 99%. That would mean our detector has a high sensitivity and we can catch most bots.

And surely if 99% sensitivity is good, 100% would be even better, right? A perfect detector!

I tell you what, I’ll let you in on a little secret: I already have a detection algorithm that flags 100% of bot content, every single time. No joke, I really do. Here it is:

Algorithm: for any given post, flag it as a bot, no matter its content.

There you go. Just flag everything. That will absolutely catch every single bot, 100% of the time. A detector with perfect sensitivity!

Sounds kind of stupid, though, right? We wouldn’t want to use that detector because even though it catches all the bots, it flags every single human as a bot also. Everyone’s comments would be removed.

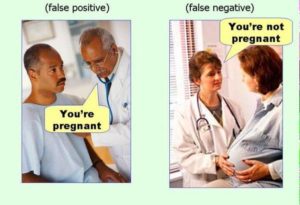

When judging whether a detector works, we need to think not just about the sensitivity, but also about how many false positives it generates! (A technical term for this is specificity: high specificity in a test means a low rate of false positives, i.e. of the human generated content, most is flagged as human and very little is wrongly flagged as bot).

A good detector should have useful sensitivity (not miss too many of the things you’re looking for) but also maintain a useful level of specificity. If there’s a high rate of false positives, or even if there’s a low rate of false positives but a lot of human content being tested, many or even most of our “flagged as a bot” results will actually be humans wrongly getting caught in the net.

Let’s try another example: imagine if we tested everyone in the U.S. population for a rare disease (1 in 1,000,000 have it), and we use a really good test with 99% sensitivity and 99% specificity. There are 330 million people in the US which means 330 of them have the disease (and 329,999,770 do not; remember, it’s rare). At 99% sensitivity, around 327 of our 330 targets will be found. Hooray! We can now treat them with our fancy and expensive new medical treatment.

But there’s an issue. Of the 329,999,770 who don’t have the disease, our 99% specificity test flagged 3,299,998 of them as having the disease (1% false positives is more than 3 million false positives).

So rather than ending up finding most of the 330 people with the disease, our test identified 3,300,325 as “tested positive for the disease” (and about 327 million as negative, including 3 people who actually have the disease). At this point we either have to apply our expensive treatment to all 3.3 million who tested positive (unsure which are the 327 that actually have it) or we have to admit that 99% sensitivity and 99% specificity may not be good enough, especially when most of the population we’re testing don’t have it.

Catching fake scientific papers

This false positive paradox (a symptom of base rate neglect) is a very basic aspect of signal detection that anyone working in medicine, neuroscience, or psychology should be familiar with.

Which is why I’m flabbergasted by a May 2023 story in Science about a new “detector” for fake research papers (i.e. peer-reviewed journal articles that are plagiarized, written by AI, use fabricated data/graphs, etc.). First a little background: yes, even in the modern scientific system of peer review, we have to deal with fakes. Why?

Academics often feel a great deal of pressure to publish articles in peer-reviewed journals; indeed, in many university departments it’s a requirement of the job and may be the main determinant of whether someone gets tenure or a promotion. Given this “publish or perish” system, it’s not surprising that some people may be tempted to take shortcuts and publish fake papers. With the recent and continuing improvement in generative artificial intelligence making it even easier to write fake papers, there’s no doubt this will be an increasingly challenging problem to solve for some time.

Why aren’t fake papers easily caught? For one, there’s an incentive by some journal publishing companies to crank out as many papers as possible. Traditionally, peer-reviewed scientific journals worked under a closed access subscription-based system where their articles were hidden behind an expensive paywall, requiring prohibitively expensive institutional subscriptions or outrageous per-article fees just to read a single article.

Recently there has been a big push toward open access publication: making the articles freely accessible to the public (whose tax money may have subsidized the researcher/institution that did the work in the first place!). Scientists generally love this — we want people to read our work and benefit from it. We don’t get paid when our research is published, so the real benefit comes from people seeing (and hopefully later using or citing) our work.

However, the publishing companies that own most of the respected scientific journals (and smaller “publishing” companies that own the dubious or made-up journals) have decided to institute open access by charging researchers to publish their work. Scientists may be offered an option: publish in the traditional closed model or allow your article to be open access if you pay a large fee (sometimes thousands of dollars per article).

While the move toward open access is undoubtedly good for science and good for society, this way of doing it not only costs the researchers who have to pay to publish, but it also leads to perverse incentives for publishers since they now make more money the more articles they publish. Higher quality science should mean rejecting poor quality research and sussing out fake papers, but publishers are less motivated to do that if it cuts into their bottom line.

So plagiarized or fake papers can be a real problem: some scientists feel extreme pressure to publish, even if they haven’t done good quality work (or any work at all in some cases!) while some publishers are incentivized not to look too closely at the tens of thousands of articles that pass through their journals each year.

Theoretically peer review as a system should catch these papers, though, right? After all, peer reviewers are other scientists working in that specialty who should know the field well enough to spot many of the fakes. It’s not a perfect system, but wouldn’t it catch most of the problems?

Paper mills

Well, one weakness in the way peer review is sometimes done is that journal editors — who are themselves just researchers in that field working as unpaid volunteers for the publishing companies — are the ones who select peer reviewers for submitted articles. A good editor knows who else does research on this topic and sends the paper out to highly qualified professionals (which, by the way, is also unpaid volunteer work by those researchers). Sometimes when an author submits their paper, they’ll include a cover letter to the editor that may include some suggested names for potential peer reviewers. This means that an editor who is lazy, or one who is working in an area a little too far outside of their specialty, or perhaps one who is themselves compromised might end up selecting peer reviewers who are not actually doing their due diligence.

Basically, sometimes you find colluding rings of authors who peer review for each other, serve as editors in questionable or fake journals (“paper mills“), and basically give the pass to publish work that is plagiarized or fake. Given the absurdly huge profit margins in scientific publishing, it’s no wonder that many questionable or fake “open access” journals have proliferated. According to a 2023 Propublica expose, even high school students looking to get into prestigious universities are entering the pay-to-publish paper mill racket. Thankfully, these paper mills are sometimes caught and shut down, but given the scale of scientific publishing, it’s hard to keep up.

Enter the fake paper detector. If we can develop automated tools to help spot fake papers, we can catch and hopefully close these paper mills, retract fake papers, perhaps even have professional consequences for those who have cheated the system.

A fake paper detector

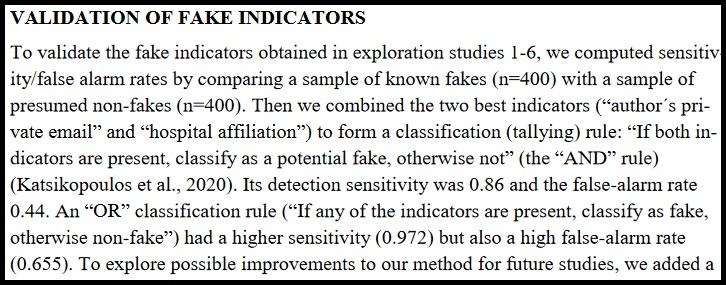

So in May 2023, I read this Science story about one such fake paper detector developed by Bernhard Sabel and colleagues (including the esteemed Gerg Gigerenzer, a well-known psychological scientist who works in judgment and decision making). The story was about their newly developed detector that looked for red flags of possible fake publications. The indicators they used to red flag papers were based on a questionnaire they’d sent to a sample of authors (some suspected of fake papers, and some not). Using these indicators, Sabel and colleagues tested some papers and found that up to 34%(!) of neuroscience papers from 2020 were faked or plagiarized, and up to 24%(!) of those in medicine were.

That sounds crazy! That should freak us out…if true. Yet what the Science story about this detector just glossed right over is that the detection tool was flagging papers based on just three simple things: the author uses a non-institutional email address, they have no international co-author, and/or they have a hospital affiliation. Just by looking for these simple tags, they red flagged potential fake papers.

Which sounds promising, and could be extremely helpful, if those indicators truly were indicative of fake papers and didn’t also apply to many real papers. But there’s the rub: tons of very legitimate papers in neuroscience and medicine involve authors affiliated with a hospital, working without an international collaborator, and/or using a non-institutional email address.

I myself used a private email address prior to getting tenure. Academics often switch between institutions many times, be it for graduate school, post-doc positions, visiting professorships, or simply changing schools; if you use an institutional email address when publishing a paper that may still be read and cited a decade later, then you risk having a defunct or inaccessible email associated with the paper.

Okay, so how did this new detector do when the authors tested it? They applied this “system” to hundreds of papers (400 known to be fake, 400 normal) and found that their system had a false positive rate of around 44% (or 66% when using just one indicator). Meaning it wrongly red-flagged real papers over 40% of the time.

Assuming there are more real papers than fake papers in scientific publishing (which seems very likely), this detection system is way, way worse than useless. Like our hypothetical disease detector above, this system would lead to an absolute flood of legitimate papers being flagged and having those false positives completely swamp and obscure the actual fakes it flags (they did have a sensitivity of 86%, or 97% if using just one indicator). Even with other combinations of indicators tested out on a smaller sample, they didn’t get the false alarm rate below 37%.

Algorithmic bias

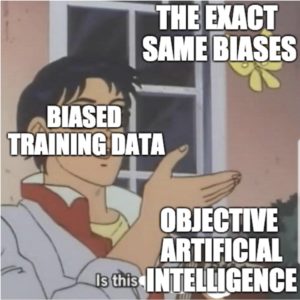

As Carl Bergstrom points out in this Fediscience Mastadon thread, the authors haven’t just proposed an unhelpful detector, they have likely created one that is biased against non-U.S., non-EU authors. As Bergstrom points out, the researchers made “a detector that they themselves show doesn’t work, and that we have every reason to expect disproportionately flags papers from Asia and the Global South–and then concludes that these area[sic] contribute the most fake papers.”

Thus, what we are looking at here is yet another example of algorithmic bias. We like to think that computerized systems are somehow more objective and fair than us capricious and biased humans, but when automated methods just instantiate and perpetuate our own human biases (even when it comes from a well-intentioned place), we are just making our biases systemic and cloaking them in the respectability of the objective 1s and 0s of a computer program.

Now, I don’t want to rule out the potential of using algorithms to detect possible fake papers. As I said before, fake papers are a real problem and work like that of Sabel and colleagues is one promising angle of attack. But detectors based on what a journal editor thinks is sketchy (like doing a project only with people in your own country) has significant issues beyond just the problem of false positives swamping true positives. The latter issue may be solved using more standard ways of developing detectors applying machine learning pattern-recognition algorithms to large samples of fake and real papers (and then testing the detector on a new set of fake and real papers that weren’t in its training set). This is closer to what we do with automated detection of fake news stories, and even those systems are still plagued by more false positives than we’d like.

But the former problem — algorithmic bias — may still show up even with a detector that has better specificity. After all, it may be that people who publish fake papers do share some minor indicators in common with a particular group of innocents (more than other groups), in which case whatever the false positive rate is, it risks flagging way more of those innocents than the actual fakers (assuming there are many more innocents than fakers and assuming the specificity isn’t 100% perfect). The false positive paradox can actually be behind algorithmic bias, even if no human biases explicitly went into deciding what the detector looks for.

Good intentions or not, we need to be mindful of the unintentional systemic biases we’re creating or perpetuating when false positives are more likely to occur for some groups than others.

References

Bergstrom, C. [@ct_bergstrom]. (2023, May 12). Finally, I want to stress that it is no defense whatsoever to say that the algorithm could be used simply as a preliminary screen [Mastadon post]. Fediscience.org. https://fediscience.org/@ct_bergstrom/110360115914104987

Sabel, B. A., Knaack, E., Gigerenzer, G., & Bilc, M. (Preprint, version May 8, 2023). Fake publications in biomedical science: Red-flagging method indicates mass production. https://doi.org/10.1101/2023.05.06.23289563 [PDF][Not Peer Reviewed]

Leave a Reply